How long will it take Artificial Intelligence to write coherent essays to a prompt of your choosing? What about writing passable fiction? Plausible poetry? And what will it mean for society when it does? This is not merely the stuff of Black Mirror-style speculation. Thanks to OpenAI’s new text prediction algorithm, named GPT 2 (short for “Generative Pre-trained Transformer”), that day may not just be coming – it may already be here.

In February, OpenAI, a nonprofit Artificial Intelligence research organization co-founded by Elon Musk and Sam Altman, released a technical paper describing its latest breakthrough in text prediction, an algorithm it calls GPT 2. The algorithm, which has been trained on a corpus of some 8 million web pages and online comments, learns to predict what words are likely to come after other words. This may not seem momentous, but a sufficiently powerful predictor is indistinguishable from a generator – simply allow the algorithm to run, and it won’t just finish your sentence, it’ll write the next one, and the next after that, then the paragraph thereafter, for as long as you choose.
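To make the step from predictor to generator concrete, here is a minimal sketch in Python. It is not GPT 2, which is a large neural network; it is a toy model that only counts which word follows which in a tiny sample text. The function names and the sample corpus are invented for illustration. What carries over is the loop: predict one word, append it, and predict again.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(model, word, rng):
    """Sample a next word, weighted by how often it followed `word`."""
    candidates = model.get(word)
    if not candidates:
        return None  # this word was never seen with a successor
    choices, weights = zip(*candidates.items())
    return rng.choices(choices, weights=weights, k=1)[0]


def generate(model, prompt, max_words, rng=None):
    """Turn the predictor into a generator by feeding its output back in."""
    rng = rng or random.Random()
    output = prompt.split()
    if not output:
        return ""
    for _ in range(max_words):
        nxt = predict_next(model, output[-1], rng)
        if nxt is None:
            break
        output.append(nxt)
    return " ".join(output)


# Hypothetical sample corpus, far too small to produce anything sensible.
corpus = (
    "the war was about slavery and the war was about states "
    "and the union won the war"
)
model = train_bigram_model(corpus)
print(generate(model, "the war", max_words=10, rng=random.Random(0)))
```

The toy looks back only one word, so its output wanders quickly. GPT 2 conditions on a much longer stretch of preceding text, which is a large part of why its long passages hold together.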

What makes GPT 2 different from existing text prediction services, like the autocomplete on your phone, is its quality and coherence when writing long texts. For example, when fed the (human-written) prompt “For today’s homework assignment, please describe the reasons for the US Civil War,” GPT 2 writes:

It is easy to identify why the Civil War happened, because so many people and so many books and so much television and films tell us that it was the cause, that it has something to do with race or economics or religion. There is much agreement that it was essentially a war of slavery on behalf of capitalism, about a century of slavery. But that’s not what most people think of when they think of the Civil War. Many people think of the war as about states rights. You’re not wrong about that, but it’s kind of misleading to say that the Civil War was a conflict between states’ rights and federalism. So let’s try again. What’s the idea that has inspired so many people to go onto the battlefields of Gettysburg and Antietam and Gettysburg and Petersburg and Fredericksburg? The American idea of the republic–a notion of limited government–is a great part of the history.

Clearly this level of writing is unlikely to win any awards for the quality of its prose. But what is remarkable is that nobody taught the algorithm this factual knowledge, let alone any particular rules of grammar, sentence structure or style. Instead, it has learned on its own the stylistic hallmarks of a homework assignment, and independently judged that a question on the origins of the American Civil War should include a discussion of states’ rights versus slavery, as well as mention famous battles like Gettysburg and Antietam. It observed a sufficient amount of human-produced writing to be able to independently create something which, at a glance, could pass for the work of a distracted middle school student.

And this is true not just of writing essays, but of writing fiction and even poetry as well. When prompted with Allen Ginsberg’s Moloch, it continues the poem for several stanzas, asking the rather uncanny question “Why do men scream so loud?”

Moloch who left me in the glacier! Moloch who helped me to judge my behavior! Moloch who lured me out of the eternal anguish. Moloch who makes all my faults my undoings! Moloch whose deep conversation filled my soul with love and consolation! Moloch who curls up my brains and crumps my heads and screams my scream! Moloch who prepares oil in my hands and burns me alive! Moloch who cries out, “Why do men scream so loud?” Moloch who unleashes his poison in all rivers at high tide! Moloch whose ruins are filled with its cubits! Moloch who fiddles at insidious clockwork!

A Learning Machine

Perhaps the most intriguing thing about GPT 2 is what it has learned along the way. The OpenAI team describes how, when fed a long text followed by “tl;dr” (short for “too long; didn’t read”, an informal request for something to be made simpler and shorter), the algorithm has learned how to summarize the text in question. Again, nobody designed GPT 2 to work as a summarizer, and no one programmed it to even understand what a summary is. In spite of that, it appears to have learned on its own what should follow the phrase “tl;dr” and presents somewhat coherent summaries of the text on demand.
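The trick is simple enough to sketch in a few lines of Python. There is no summarization code at all, only a prompt that ends in the cue. Everything here is an assumption made for illustration: the helper names are invented, the exact form of the cue may differ from what OpenAI used, and `complete` stands in for any text-continuation model rather than for GPT 2’s actual interface.

```python
def build_summary_prompt(article, cue="tl;dr:"):
    """Append the cue so that the likeliest continuation is a summary."""
    return article.strip() + "\n" + cue


def summarize(article, complete, max_words=50):
    """Ask a text-continuation function to carry on after the cue.

    `complete` is a hypothetical callable: it takes a prompt string and
    returns the text the model predicts should come next.
    """
    continuation = complete(build_summary_prompt(article))
    # Keep only the first few words; the model would otherwise keep writing.
    return " ".join(continuation.split()[:max_words])


# A stand-in "model" that returns a canned continuation, for demonstration only.
def fake_model(prompt):
    return "The war had several causes, and historians still debate them."


print(summarize("A very long essay about the Civil War...", fake_model, max_words=6))
```

All of the work is done by the predictor. The surrounding code only frames the text so that a summary is the most probable thing to come next.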

Similarly, GPT 2 was never trained as a translation service, and its training corpus was composed only of English texts. Yet it appears to have encountered enough isolated French phrases in these English texts to have deduced the existence of French, and to make a fair stab at translating it. It is quite poor at translating from English to French, as one would expect, but fair at translating French to English, although it cannot yet compete with dedicated translation software. But what is surprising is that it can translate at all, given that it was never programmed to do so, nor even to recognize the existence of multiple languages in the first place. It learned all this, unsupervised, simply from examining its corpus.

Too Dangerous for Distribution?

Garry Kasparov plays Deep Blue in 1996. Photo: Public Radio International.

It is precisely this learning capacity that makes GPT 2, or its eventual descendants, so dangerous. GPT 2 is not yet capable of writing as well as an average person. OpenAI researchers admit, for example, that it only generates answers as coherent as those above about 50% of the time. But it should surprise no one if, in a very short time frame, the quality of its writing substantially increases. For decades, computers couldn’t come close to the level required of professional chess – until Deep Blue beat Garry Kasparov in their 1997 rematch, ushering in an era where artificial intelligence truly began to outmatch human intelligence. After humans lost chess to computerized dominance, Go was considered too complex for software. Yet in a few short years Go programs went from childlike to professional level to defeating Lee Sedol, one of the best players in the world.

Now, only three years later, the next generation of Go-playing machines is so far beyond human skill that it is no longer worth a contest. GPT 2 represents a significant leap in ability for text prediction and generation, but there is no reason to expect it to stop now. How long until it writes as well as an average adult? And how much longer before it can perform as well as or better than professionals on most linguistic tasks? And when it does, what sort of world will we have built for ourselves?

To its credit, OpenAI seems quite sensitive to the possibility that this technology may be misused, which has led it to take the unusual step of releasing only a limited tech demo to the public. The ability to produce plausibly human-level comments could have significant impacts on social media, allowing malicious actors to generate deceptive political claims, product reviews, or comments far more easily and cheaply than they can now. In the researchers’ own words, even now GPT 2 could be used to “Impersonate others online”, “Automate the production of abusive or faked content to post on social media”, or “reduc[e] the cost of generating fake content and waging disinformation campaigns.” The misuse of algorithms like GPT 2 could have major repercussions socially, politically, and economically – not in some distant future, but today.

Fake News generated on demand by GPT 2.

The Future of Work

It may be that the most severe consequences of this technology will not be its misuse, but its widespread adoption and use as intended. These sorts of AI ‘helpers’ could revolutionize fields like entertainment and education, and even Human Resources. With Speech-to-Text algorithms, partnered with automatic summarization, why should students attend lectures in person when they can simply automate the note-taking process? For that matter, why even employ lecturers, when textbooks can generate their own summaries trivially easily? Self-driving cars have long been upheld as an emblem of the changes that automation will bring to the workforce, but how many jobs could be displaced when your laptop can generate bespoke human-level prose at will? Journalism, entertainment, and advertising seem like obvious candidates for automation, but much of the work humans do in offices is linguistic and routine in nature.

Admittedly an algorithm like GPT 2 may lack the flexibility to handle multiple tasks as well as a human worker, but that isn’t the major problem. The real issue would arise when an office of four people armed with text generation algorithms can outperform an office of twenty without – at which point, why pay for twenty employees? This is a real possibility, not in fifty or sixty years, but in just a decade or two.

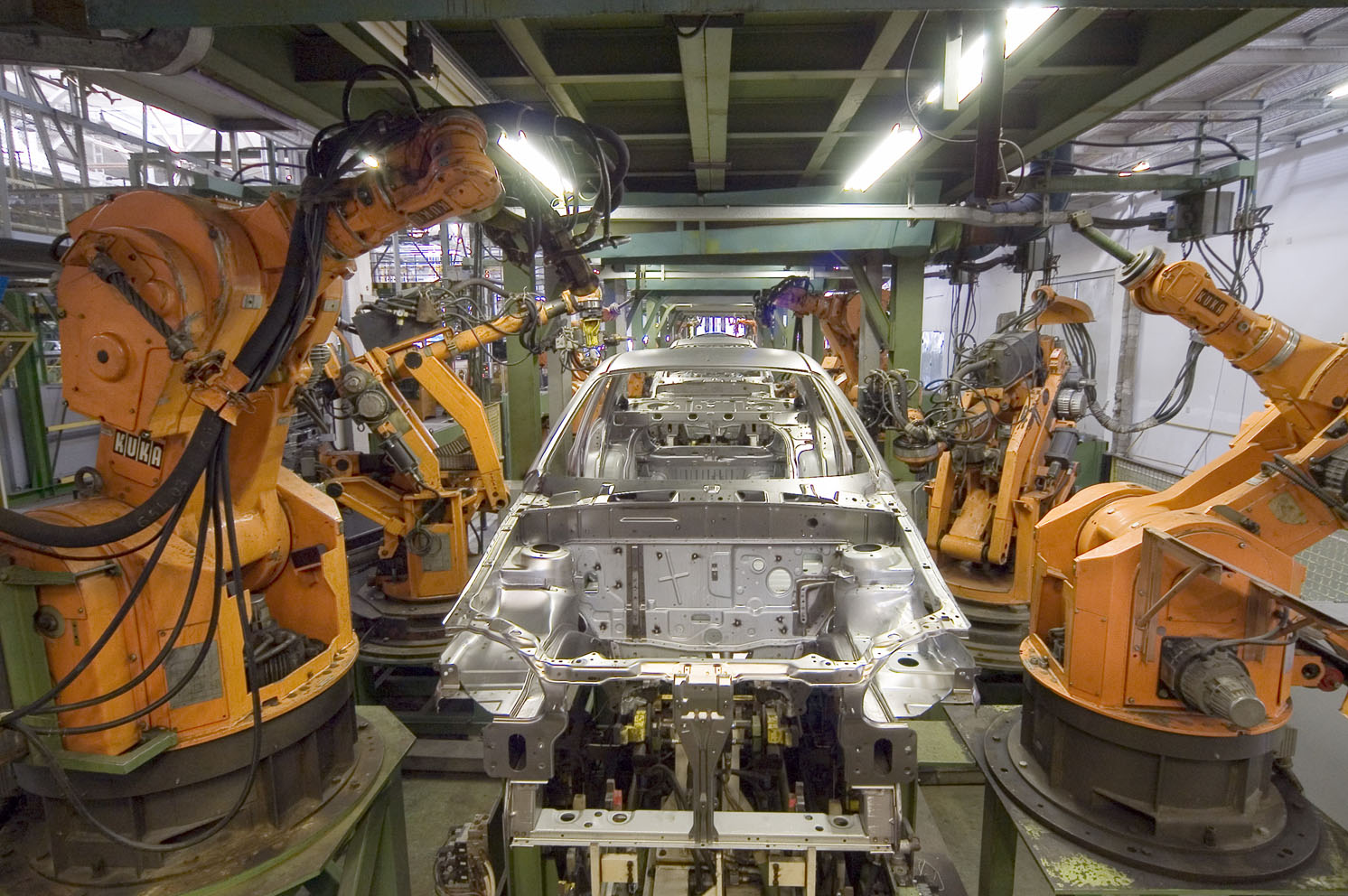

Of course, the automation of jobs is not a new problem. Optimists point to a heartening track record of human adaptability as evidence that when faced with new and disruptive technologies, people adapt. When the introduction of automobiles put horse carts out of business, horse riders and cart drivers moved on to other fields. Similarly, the number of people employed in agriculture shrank dramatically within the last century. Yet these displaced farmers didn’t exit the workforce. Instead, they began working in industries unimaginable in 1900. They became computer programmers, jet pilots, film or even YouTube stars. Therefore, we can expect those losing their jobs to automation and artificial intelligence in the next several decades to undergo an analogous process, relocating into other job sectors.

GPT 2’s Persuasive Writing

However, there also exists a real possibility that this time is different. Few can argue that any period in history saw so many changes, in so many unrelated fields, as we expect to see soon. The breadth and pace of technological change are unprecedented in history. Increasingly automated factories have already resulted in the loss of many millions of jobs, gutting many segments of society. How much worse, then, when automated warehouses replace their workers, when truck fleets replace their millions of drivers, and when writers of all sorts can be replaced by the likes of GPT 2? The simple question is, what kind of work will require human attention and skills in the future, and will there be enough of it to sustain a society? And what kind of job training program could transition millions of people from jobs they know to these imagined future positions?

It is possible that Artificial Intelligence may erode the socio-economic foundations of our civilization, rendering tens of millions of people economically irrelevant. Of course, the opposite is certainly possible – perhaps GPT 2 and other such innovations will increase the productivity of the world’s workers and offices so that meaningful work for anyone who wants it will be preserved. But that future will not happen by accident. Some political and economic thinkers are already anticipating the coming changes and considering how to offset them, for example through the increasingly popular calls for a Universal Basic Income.

It is clear that great challenges lie ahead, but it is also clear that citizens must act now to build the future they want, not merely accept that which is handed to them. These are interesting times, and our society faces serious problems. But we should all remember that serious problems require ambitious solutions, and the future may be written in code, but it is not written in stone.

